Happy Sunday, and welcome to AI ThreatScape!

In today's edition, we dive into:

Disinformation: China-linked disinformation targets Canadian politicians

Deepfake: Why Is the Zambian President Not Seeking Re-Election in 2026?

Statistic: How many millennials are using AI assistants to pay bills?

Data Privacy: U.S. Immigration Using AI-Powered Tool to Scan Social Media Before Granting Visa

Regulation: White House All Set to Issue Executive Order to Manage AI Risks

DISINFORMATION

China-linked Disinformation Targets Canadian Politicians

The Australian Strategic Policy Institute (ASPI) has identified a disinformation campaign targeting Canadian politicians, including Prime Minister Justin Trudeau.

According to ASPI, the campaign began on August 7. Around 2,000 inauthentic social media accounts published more than 15,000 posts in English, French and Mandarin on X, Facebook and YouTube. The campaign subsided after September 11, four days after the Canadian government announced an inquiry into foreign interference.

This campaign used ‘spamouflage’, a disinformation tactic in which a network of inauthentic social media accounts is used to post and amplify propaganda across multiple social media platforms.

This campaign targeted around 50 MPs, including the Prime Minister, accusing them of corruption, racism and extramarital affairs.

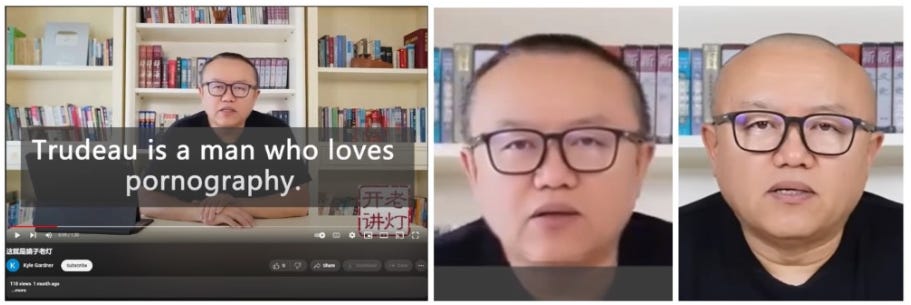

Most notably, this disinformation campaign used sophisticated deepfake technology. Here are a couple of examples:

One post used a deepfake video of Liu Xin, a popular Canada-based political vlogger of Chinese heritage. In the video, Liu appears to accuse Canadian politicians of corruption, racism and philandering. Liu has made no such allegations.

Source: ASPI

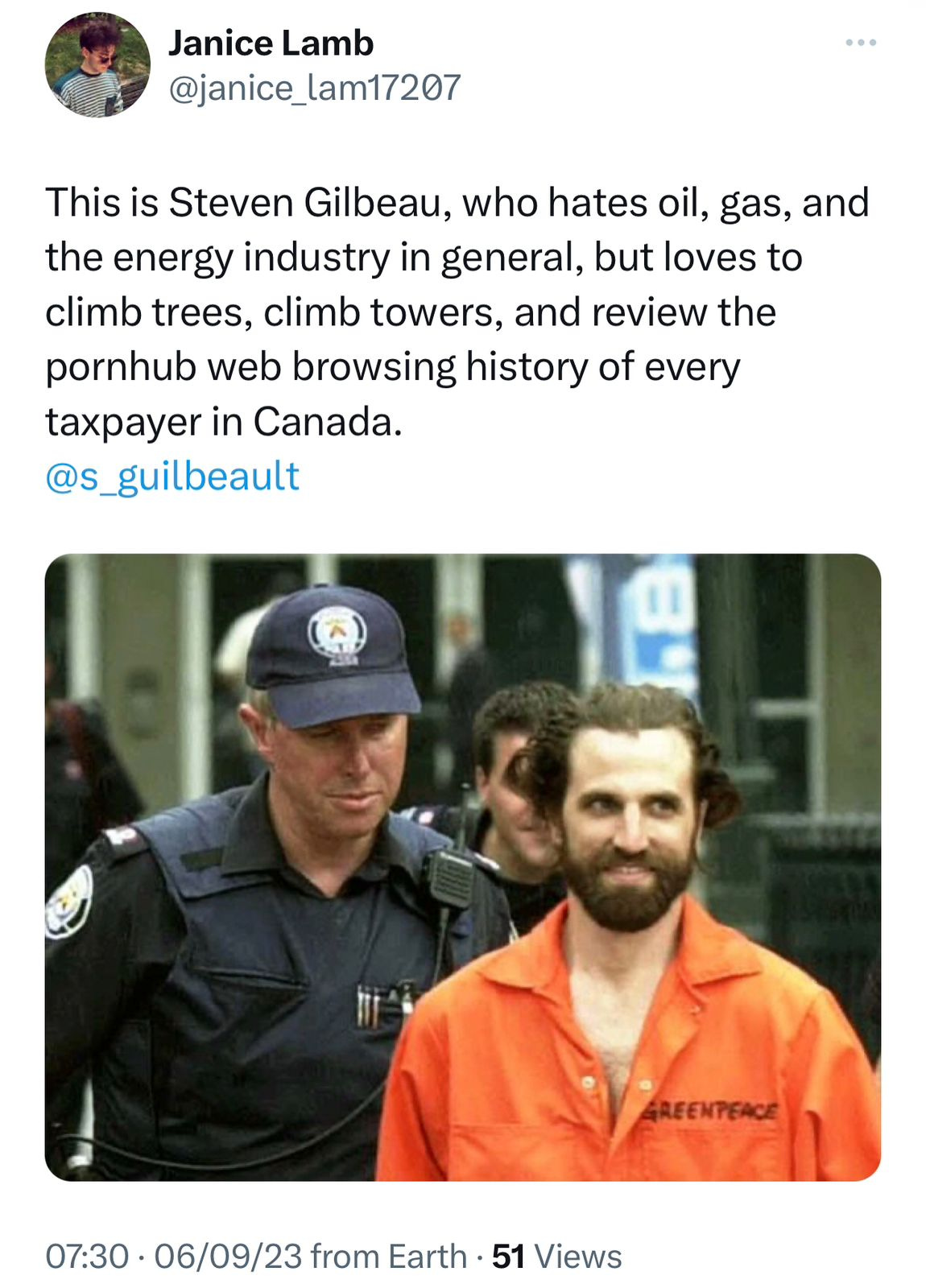

In another post on X, Steven Guilbeault, Canada’s Minister of Environment and Climate Change, is shown being arrested, with a malicious allegation accompanying the image.

Source: X

The campaign's impact has been low to negligible because it drew little response from genuine users. Engagement remained largely within the inauthentic network itself.

Why Does This Matter?

Although the campaign did not receive much engagement, ASPI assesses that its objective may have been to harass and intimidate its targets rather than to generate mass engagement.

The Chinese Communist Party (CCP) has been strongly focussed on this type of digital transnational repression and is likely to keep using the technique against entities it deems adversaries.

Recent reports indicate that China has upped the ante on its disinformation campaigns against its adversaries. Generative AI has become a major tool in these operations, and its use is likely to grow.

DEEPFAKE

Why Is the Zambian President Not Seeking Re-Election in 2026?

A video surfaced in which Zambian President Hakainde Hichilema appears to announce that he will not run for the presidency in 2026. Hichilema was elected in 2021 after defeating the incumbent, Edgar Lungu.

There has been speculation that Lungu, who retired from politics after his 2021 defeat, will make a comeback. Under Zambia’s constitution, a president may serve two five-year terms.

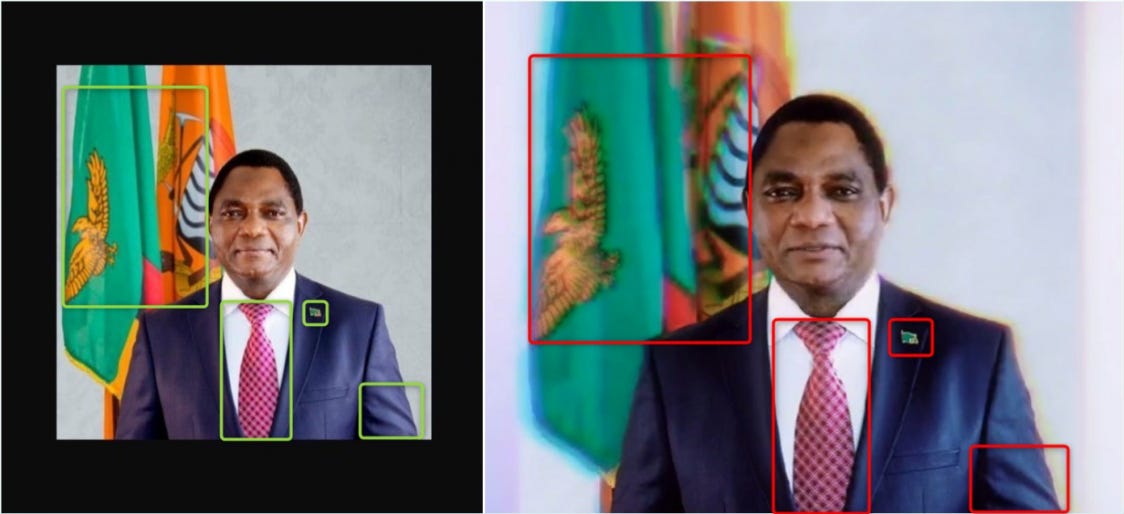

The video, which surfaced on X and TikTok, was analysed by experts, who concluded that it was AI-generated.

Source: AFP

The telltale signs that led to this conclusion include:

Video Quality: The video is slightly blurry and its colours appear filtered. Official announcements are usually released in high quality.

Unnatural Still Body: Throughout the video, Hichilema’s upper body and neck remain unusually still. This is a common sign that only the head and face have been AI-generated.

Matching Attire & Background: A reverse image search traced the source image to Hichilema’s official X account. The same suit and the same background appear in the AI-generated video.

Source: AFP

Odd Facial Movement: Although the audio is synced with the facial movements, experts say those movements look unnatural. In several individual frames, the teeth also appear malformed.

Why Does This Matter?

The ruling United Party for National Development (UPND) has alleged that the opposition was behind this video, but this has not been proven. Judging by its likely motive, the video appears intended to cause confusion and sow mistrust.

Because deepfake content can be produced so easily and cheaply, more such deepfakes should be expected. The use of AI-generated content to target opposition leaders has risen, especially in the lead-up to elections, and this trend is likely to continue.

It remains to be seen whether AI-generated deepfakes can significantly influence election results. However, with several crucial elections on the horizon, including the 2024 U.S. presidential election, we will likely begin to understand their true impact.

STATISTIC

30%

Percentage of U.S. millennials who use voice assistants to pay their bills.

DATA PRIVACY

U.S. Immigration Using AI-Powered Tool to Scan Social Media Before Granting Visa

The U.S. Immigration and Customs Enforcement (ICE) agency has reportedly been using an AI-powered tool to scan the social media posts of individuals of interest. The tool, called Giant Oak Search Technology (GOST), is used to identify individuals who may pose a ‘risk’ to the United States based on their social media content.

After reviewing the social media content, the system assigns the individual a score. ICE can then use this score when deciding whether the person should be granted a visa.

Analysts can view the target’s social media profiles and social graph to understand who they may be connected with.

However, the use of this tool is not new. The Department of Homeland Security has used it since 2014, and ICE has paid over $10 million for GOST since 2017.

Why Does This Matter?

An AI-powered tool like GOST gives its users deep insight into a target's life. Imagine someone being able to see who you are connected with, monitor your movements, spot patterns in your behaviour, and learn your likes, dislikes and sentiments.

Only ICE and the other agencies using GOST know how the collected information is used. Such a tool can be valuable when used with the right intent and in an unbiased manner. However, although the tool is AI-powered, it is operated by humans in pursuit of specific objectives, and humans are often biased. ICE’s GOST-enabled decision-making is therefore vulnerable to bias, which may be leading to wrong decisions.

REGULATION

White House All Set to Issue Executive Order to Manage AI Risks

President Joe Biden is expected to sign a comprehensive executive order on Monday that will govern how federal agencies use AI.

Some of the objectives that the government intends to achieve through this order are:

Promote safe and responsible deployment of AI across government agencies.

Issue guidelines to federal contractors on preventing discrimination in AI-powered hiring systems.

Introduce guardrails requiring disclosure of how AI systems would be used to collect or use citizens’ information.

Crack down on harms associated with generative AI by identifying tools to track, authenticate, label and audit AI-generated content.

Prevent the spread of AI-generated child sexual abuse material (CSAM) and non-consensual intimate images of individuals.

Address numerous AI risks, especially those pertaining to cybersecurity, defence, health, labour, energy, education and public benefits.

Address bias caused by the use of AI for loan underwriting.

Agencies would be given between 90 and 240 days to fulfil the requirements of the order.

Why Does This Matter?

The government has been accused of being slow to address AI-related risks. While there have been attempts to introduce guardrails to ensure that AI is developed responsibly, their implementation has been questionable.

With this order, the government will be able not only to monitor and control how AI is used, but also, as a major customer of the leading AI companies, to dictate a number of terms on how these companies develop AI.

If it is implemented as intended, this executive order could play a major role in mitigating a number of serious AI risks within the United States. Its impact will become clearer over the next few months.

Wrapping Up

That’s a wrap for this edition of AI ThreatScape!

If you enjoyed reading this edition, please consider subscribing and sharing it with someone you think could benefit from reading AI ThreatScape!

And, if you’re already a subscriber, I’m truly grateful for your support!